Ever since vSAN 8 was announced, I’ve been waiting to try out the new Express Storage Architecture in my HomeLab, especially since the internal storage in my hosts is NVMe only.

Yesterday, the last piece of the puzzle was released: the new USB Network Native Driver v1.11 for ESXi 8. I needed it before reinstalling my hosts so that I could isolate the vSAN traffic.

Armed with the new USB NIC drivers, I reinstalled three of my four hosts with ESXi 8 and then manually installed the USB NIC drivers. One of the reasons I wanted to do a reinstall was to get rid of the external USB-connected SSD boot medium I have used since the lab was set up. One of the problems I had running vSAN 7 in this environment was that on two of my hosts, the vSAN cache device would randomly go offline under heavy load. The device simply went AWOL until the host was rebooted, after which it was fine again, until the next time.

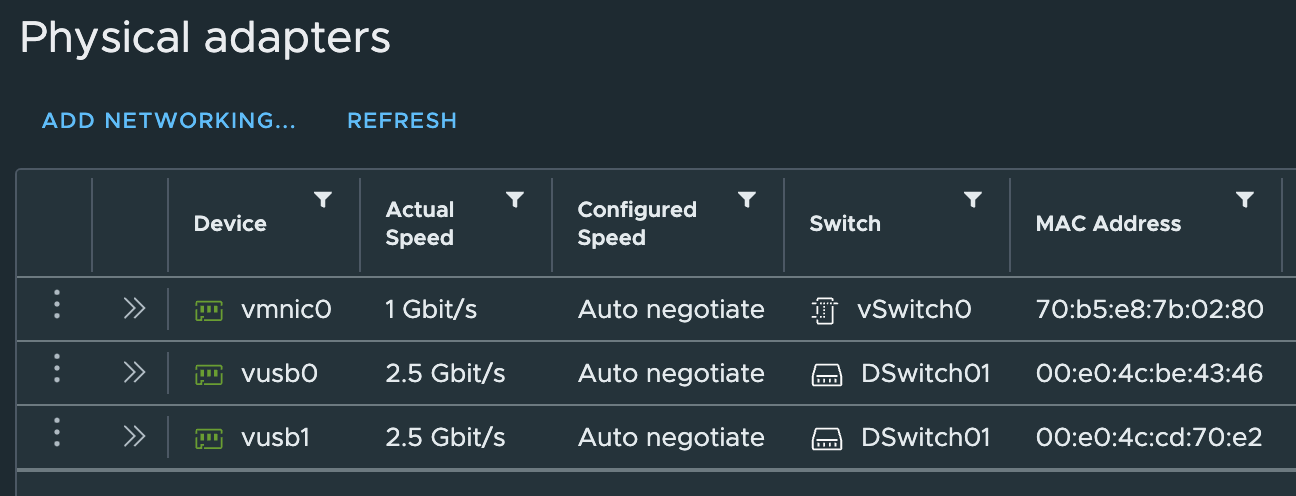

At the same time, I moved all my vSAN traffic off my 1 GbE switch and onto a new QNAP QSW-1108-8T 8-Port 2.5Gbps Unmanaged Switch. The new networking setup, and switch, is dedicated to vSAN and vMotion traffic through a new VDS with two uplinks and one Port Group for each traffic type. Uplink 1 is the active uplink for the vMotion Port Group, with Uplink 2 as standby; vSAN traffic uses the reverse configuration.
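The active/standby arrangement can be modelled in a few lines. This is a minimal Python sketch, not vSphere code: `PortGroup` and `effective_uplink` are hypothetical names, and the model only captures the failover order (use the active uplink while it is healthy, otherwise the standby), not how ESXi actually detects a failed link.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class PortGroup:
    """Hypothetical model of a VDS port group's active/standby failover order."""
    name: str
    active: str   # uplink carrying traffic while it is healthy
    standby: str  # uplink that takes over if the active one fails


def effective_uplink(pg: PortGroup, healthy: set[str]) -> str | None:
    """Return the first healthy uplink in the port group's failover order."""
    for uplink in (pg.active, pg.standby):
        if uplink in healthy:
            return uplink
    return None  # neither uplink is available


# The layout described above: each traffic type has its own active uplink.
VMOTION = PortGroup("vMotion", active="Uplink 1", standby="Uplink 2")
VSAN = PortGroup("vSAN", active="Uplink 2", standby="Uplink 1")

for scenario, healthy in [
    ("both uplinks up", {"Uplink 1", "Uplink 2"}),
    ("Uplink 2 down", {"Uplink 1"}),
]:
    for pg in (VMOTION, VSAN):
        print(f"{scenario}: {pg.name} -> {effective_uplink(pg, healthy)}")
```

With both links up, vMotion and vSAN never compete for the same 2.5 GbE link; only when one uplink fails do they share the surviving one.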

Moving to the dedicated switch means I no longer need VLANs for the vMotion and vSAN traffic (the new switch doesn’t support them anyway), and I get a throughput increase from 1 GbE to 2.5 GbE at the same time. I have four hosts in total, so this little 8-port switch now gets a run for its money (and it does seem to run a bit hot).
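As a quick sanity check on why the switch is now full, here is the port and bandwidth arithmetic as a small Python snippet. It assumes, which the text above does not state explicitly, that both VDS uplinks on every host are cabled to the QNAP switch; the constant names are purely illustrative.

```python
# Back-of-the-envelope numbers for the dedicated vSAN/vMotion switch.
# Assumption: both VDS uplinks on every host are cabled to the QNAP switch.
HOSTS = 4
UPLINKS_PER_HOST = 2
SWITCH_PORTS = 8  # QNAP QSW-1108-8T

OLD_LINK_GBPS = 1.0  # previous shared 1 GbE switch
NEW_LINK_GBPS = 2.5  # new 2.5 GbE switch

ports_used = HOSTS * UPLINKS_PER_HOST
free_ports = SWITCH_PORTS - ports_used
speedup = NEW_LINK_GBPS / OLD_LINK_GBPS

print(f"ports used: {ports_used}/{SWITCH_PORTS}, free: {free_ports}")
print(f"per-link speedup: {speedup}x")
```

Under that assumption every port is in use, and because vMotion and vSAN each have their own active uplink, each traffic type normally gets a full 2.5 GbE link per host.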

The last host was then reinstalled, and I decided to try using vSphere Lifecycle Manager to create an Image containing the USB NIC Fling and then remediate the host with it, which also worked out perfectly.

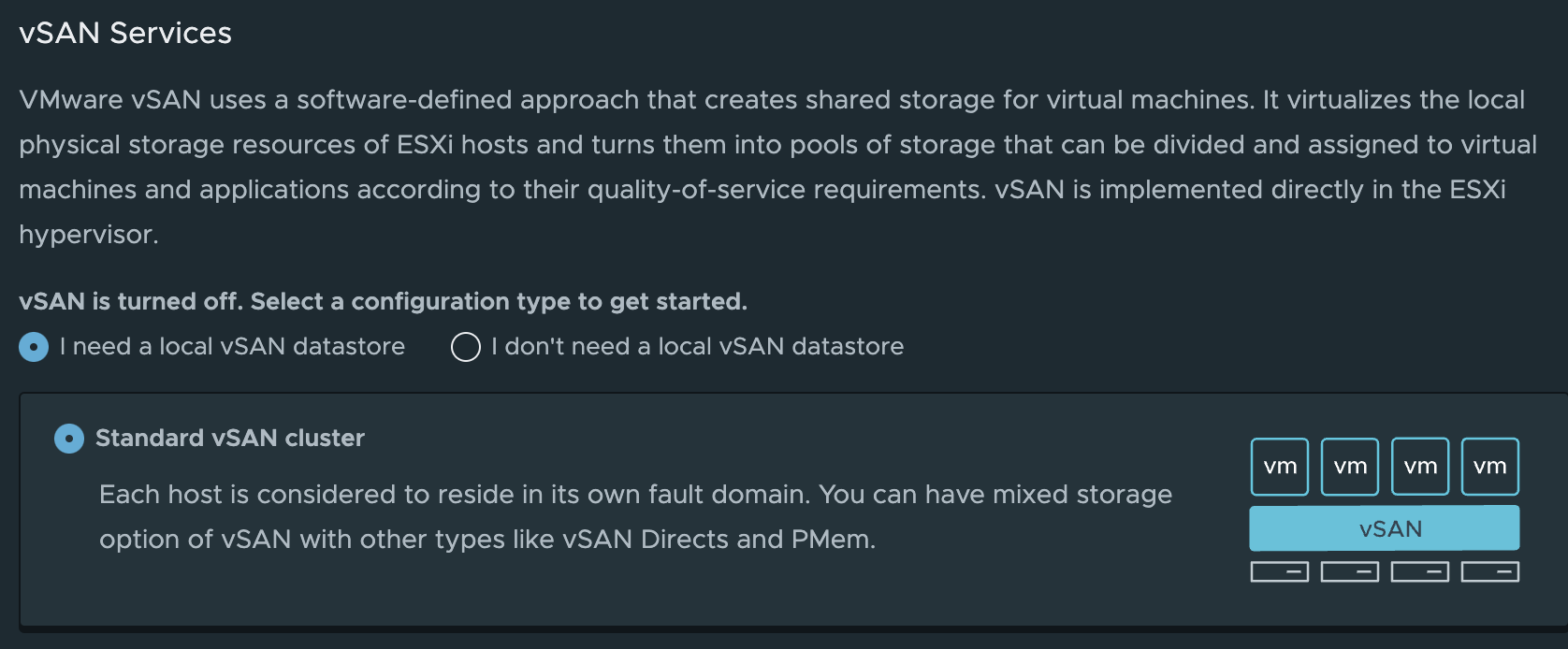

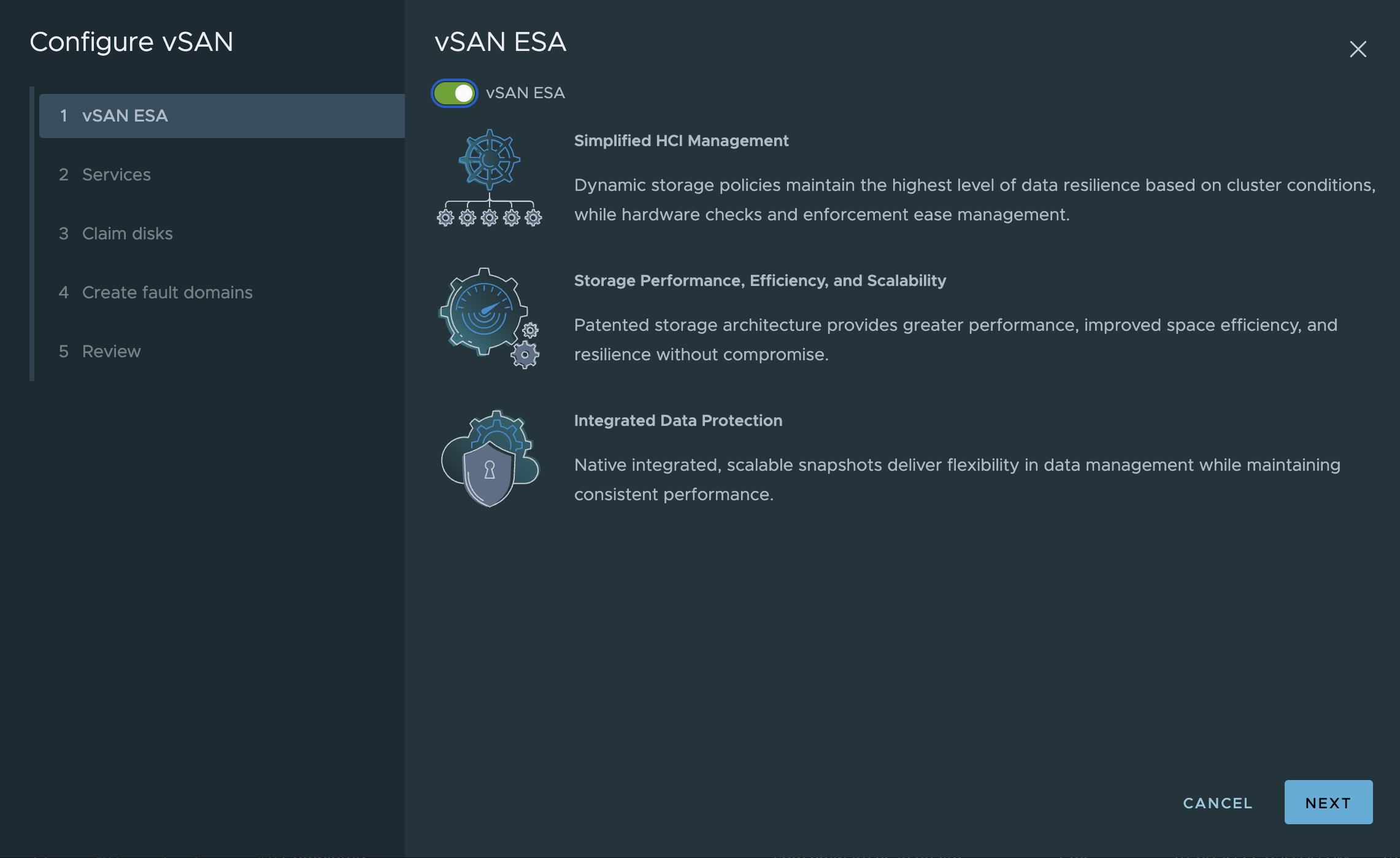

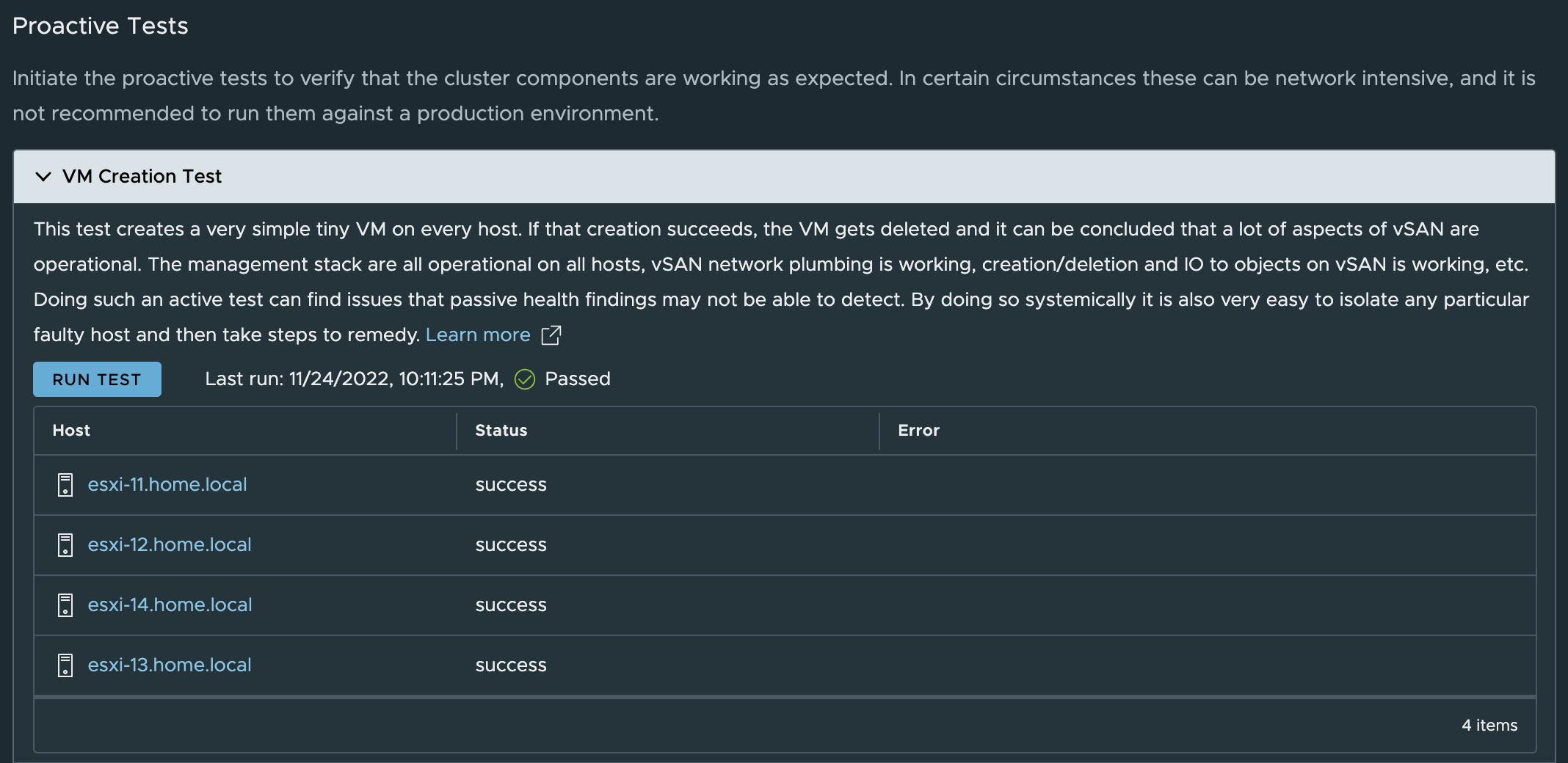

Once the hosts were installed and configured, it was time to enable vSAN and try out the new Express Storage Architecture. vSAN ESA is configured the same way as the traditional Original Storage Architecture (OSA); you simply select ESA at configuration time.

Configuring vSAN 8 ESA

Selecting ESA is just a little tick box, and off it goes.

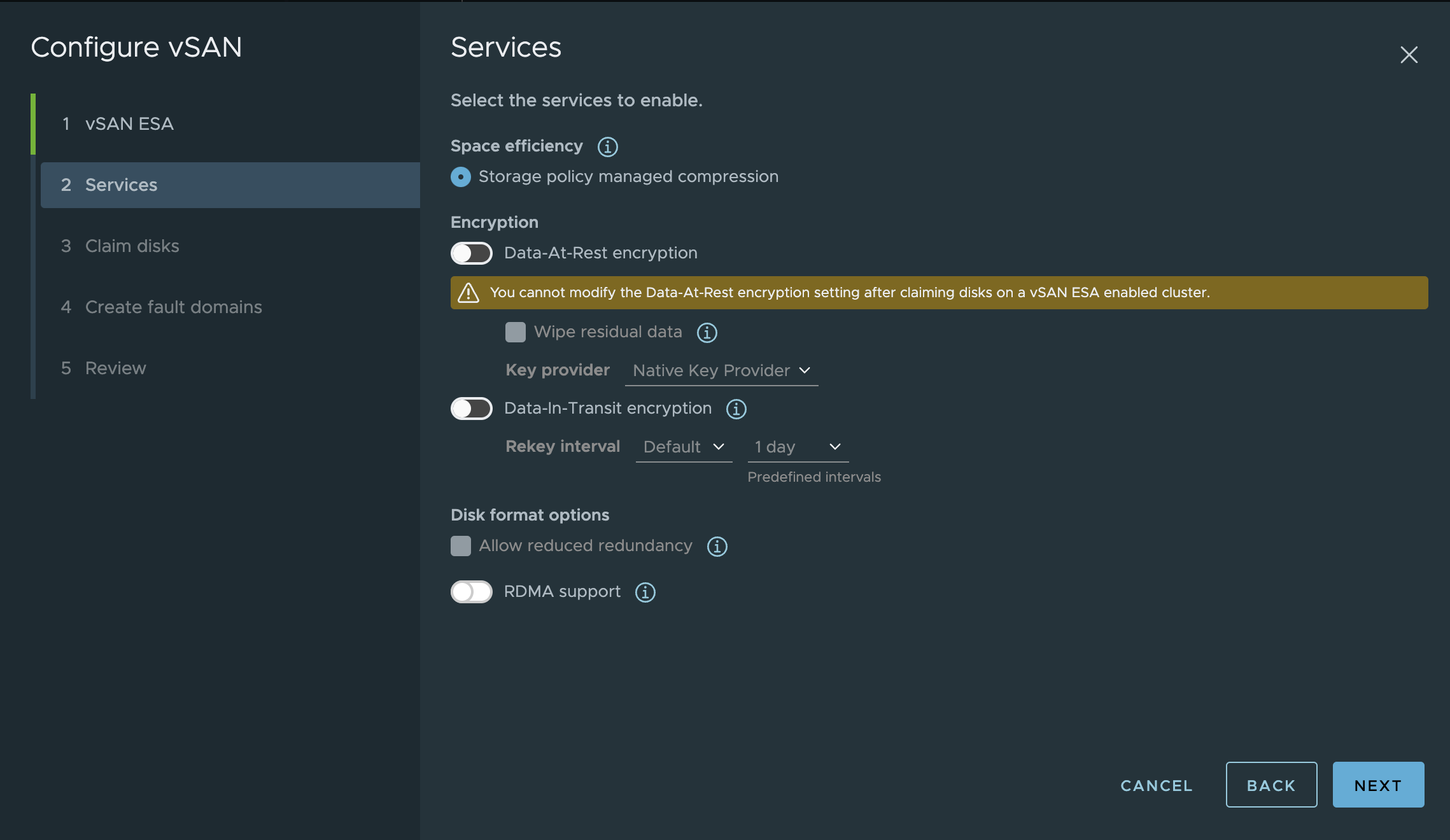

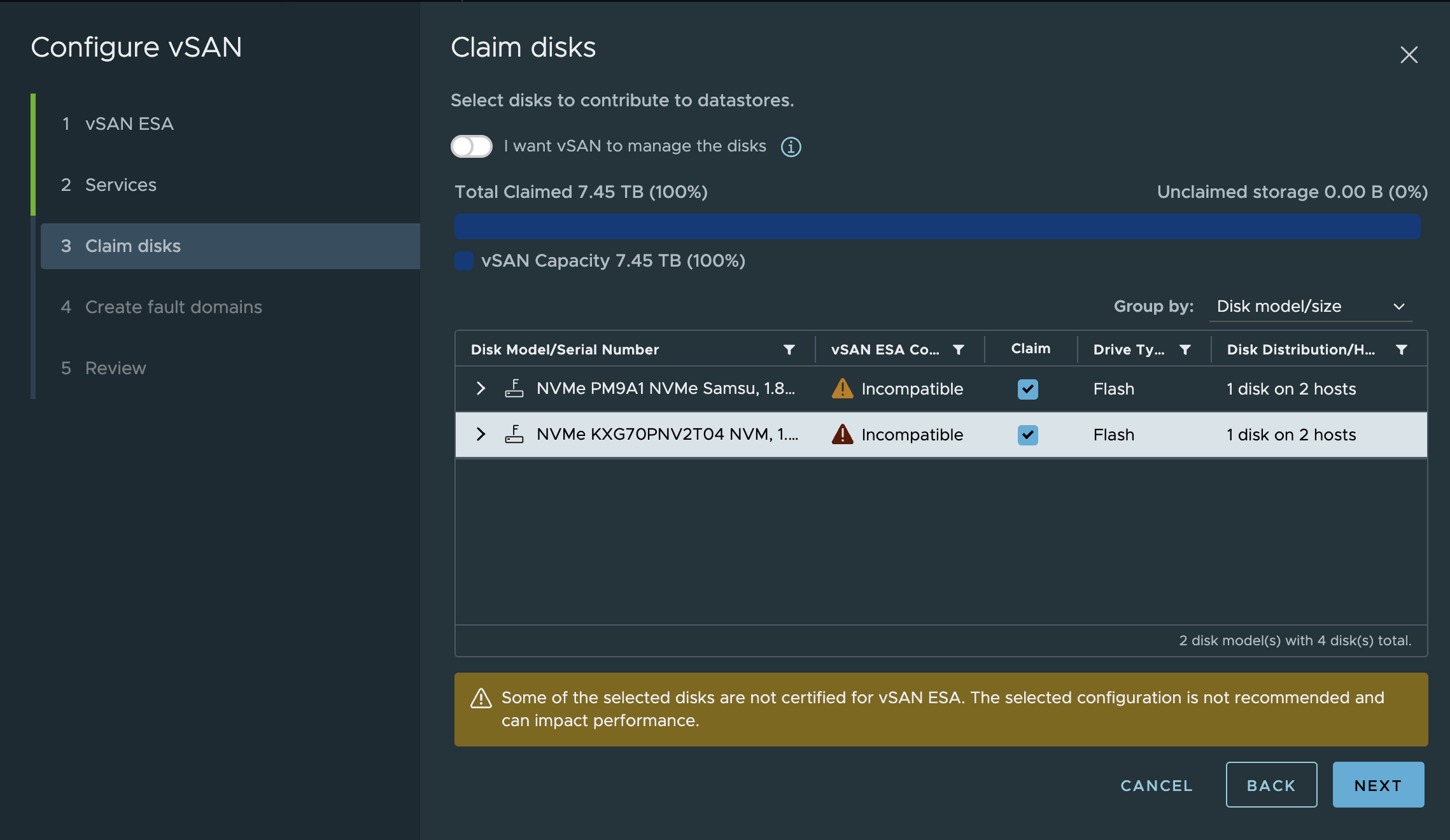

As shown in the screenshot above, my NVMe devices are listed as incompatible (i.e., not on the HCL), but that doesn’t stop the configuration from proceeding. I manually claimed the disks and continued the setup.

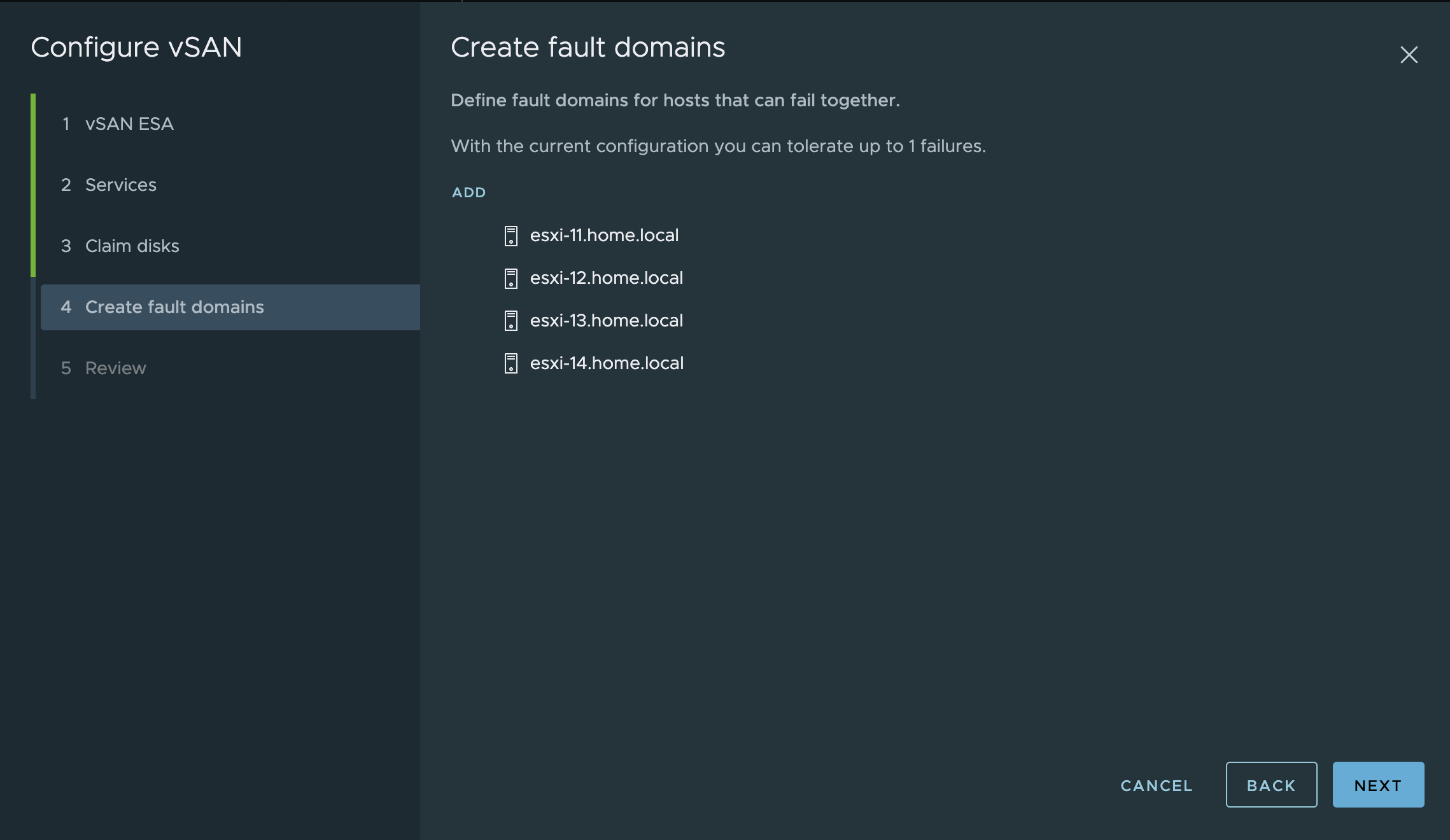

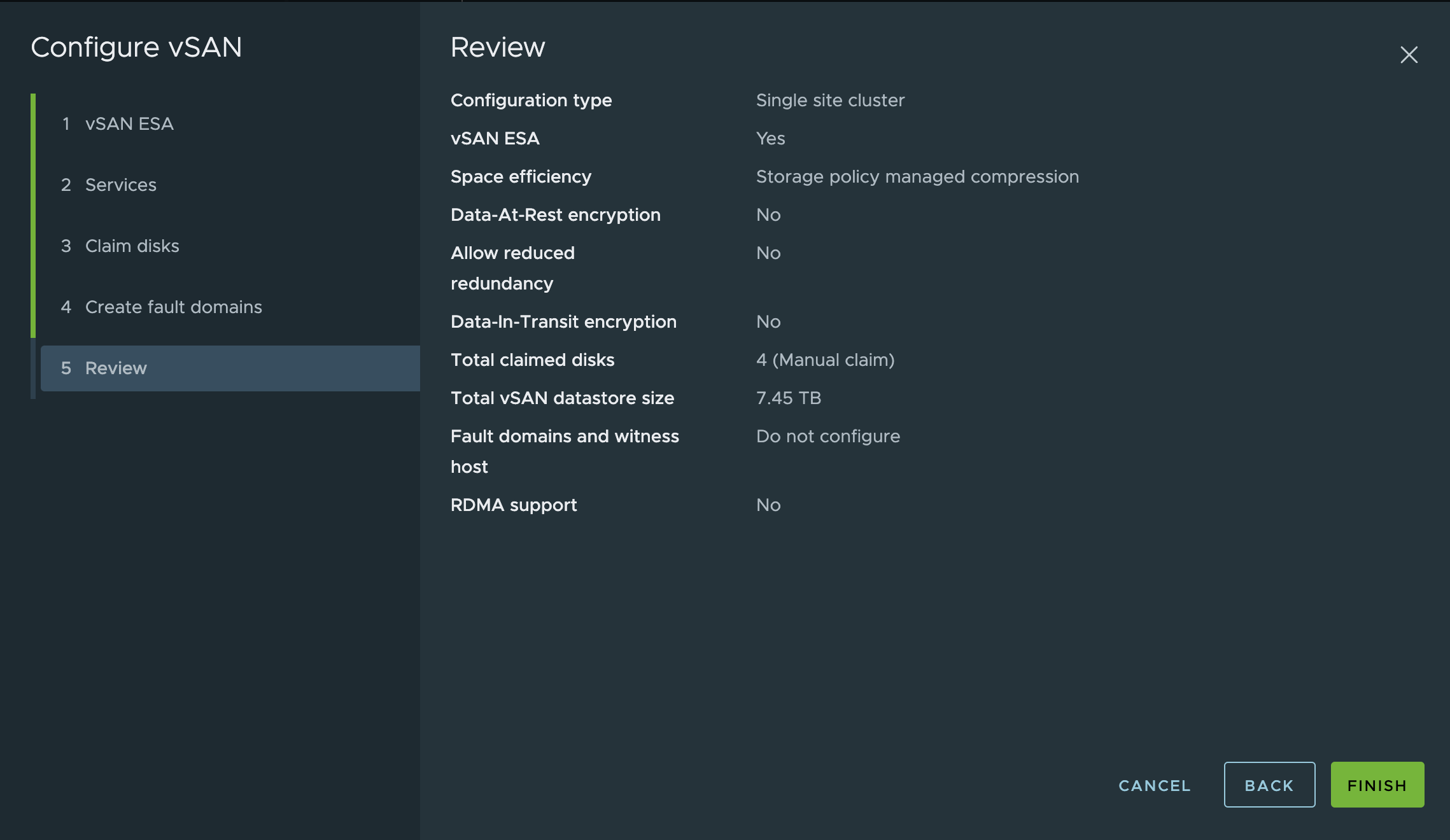

I didn’t configure any custom fault domains and moved on to the review screen.

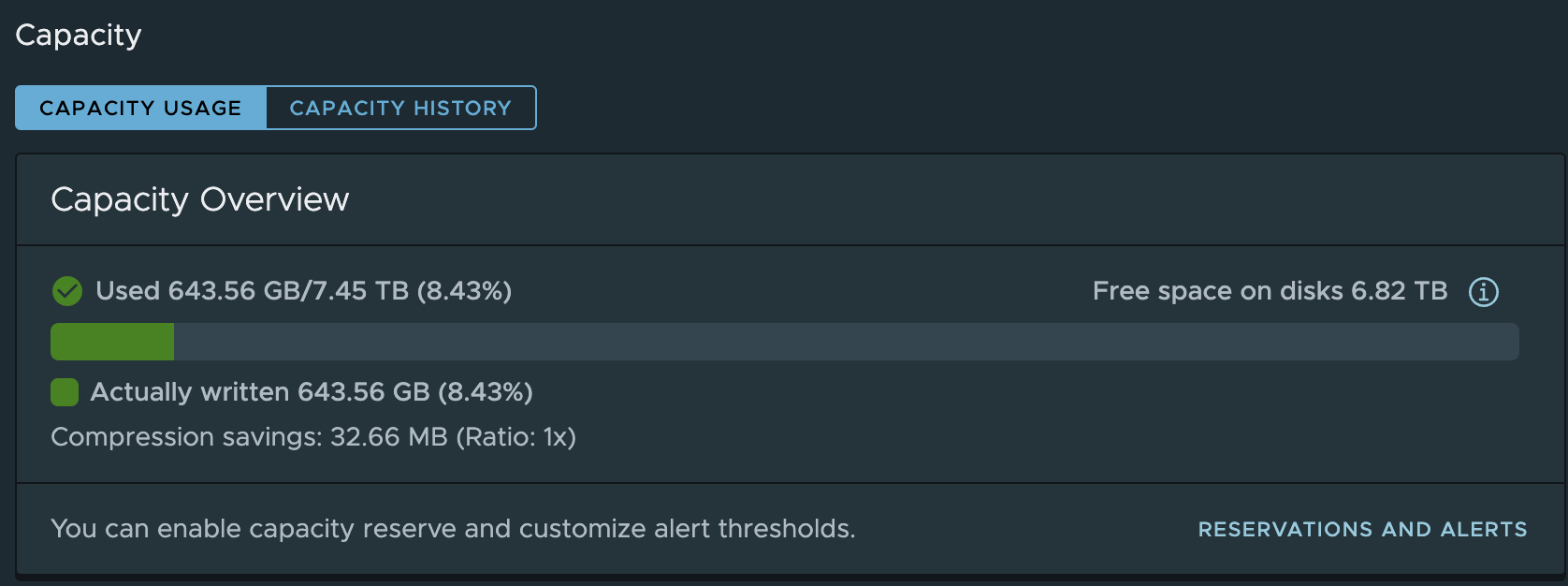

A few minutes later, I had a brand new vSAN 8 ESA cluster up and running!

Skyline Health complains about the unsupported NVMe devices and the network throughput, but that’s to be expected.

Of course, this setup is very much unsupported by VMware.

The USB NICs I use are not supported. Thankfully, the USB NIC Fling is available, but it’s not intended for production use. vSAN ESA also has a recommended minimum network speed of 25 GbE, ten times the 2.5 GbE I’m running.

The NVMe devices I use are not on the vSAN ESA HCL or VCG, and to top it off, vSAN ESA also requires a minimum of 4 NVMe devices per host; I have one. So, while this setup works, it is very much a home lab setup.

So there it is, my completely unsupported vSAN 8 ESA configuration*.